Doing DigiPres with Windows

A couple of times at NYU, a new student would ask me what kind of laptop I would recommend for courses and professional use in video and digital preservation. Maybe their personal one had just died and they were shopping for something new. Often they'd used both Mac and Windows in the past so were generally comfortable with either.

I was and still am conflicted by this question. There are essentially three options here: 1) some flavor of MacBook, 2) a PC running Windows, or 3) a PC running some flavor of Linux. (There are Chromebooks as well, but given their focus on cloud applications and lack of a robust desktop environment, I wouldn't particularly recommend them for archivists or students looking for flexibility in professional and personal use)

Each of these options has its drawbacks. MacBooks are and always have been prohibitively expensive for many people, and I'm generally on board with calling those "butterfly switch" keyboards on recent models a crime. Though a Linux distribution like Ubuntu or Linux Mint is my actual recommendation and personally preferred option, it remains unfamiliar to the vast majority of casual users (though Android and ChromeOS are maybe opening the window), and difficult to recommend without going full FOSS evangelist on someone who is largely just looking to make small talk (I'll write that post another day).

So I never want to steer people away from the very real affordability benefits of PC/Windows - yet feel guilty knowing that's angling them full tilt against the grain of Unix-like environments (Mac/Linux) that seem to dominate digital preservation tutorials and education. It makes them that student or that person in a workshop desperately trying in vain to follow along with Bash commands or muck around in /usr/local while the instructor guiltily confronts the trolley problem of spending their time saving the one or the many.

I also recognize that *nix education, while the generally preferred option for the #digipres crowd, leaves a gaping hole in many archivists' understanding of computing environments. PC/Windows remains the norm in a large number of enterprise/institutional workplaces. Teaching ffmpeg is great, but do we leave students stranded when they step into a workplace and can't install command line programs without Homebrew (or don't have admin privileges to install new programs at all)?

This post is intended as a mea culpa for some of these oversights by providing an overview of some fundamental concepts of working with Windows beyond the desktop environment - with an eye towards echoing the Windows equivalents to usual digital preservation introductions (the command line, scripting, file system, etc.).

*nix vs NT

To start, let's take a moment to consider how we got here - why are Mac/Linux systems so different from Windows, and why do they tend to dominate digital preservation conversations?

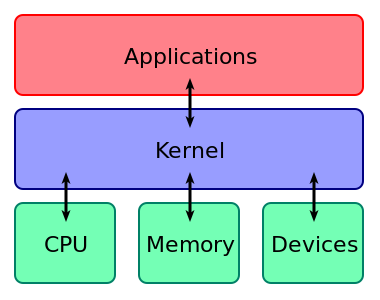

Every operating system (whether macOS, Windows, a Linux distribution, etc.) is actually a combination of various applications and a kernel. The kernel is the core part of the OS - it handles the critical task of translating all the requests you as a user make in software/applications to the hardware (CPU, RAM, drives, monitor, peripherals, etc.) that actually perform/execute the request.

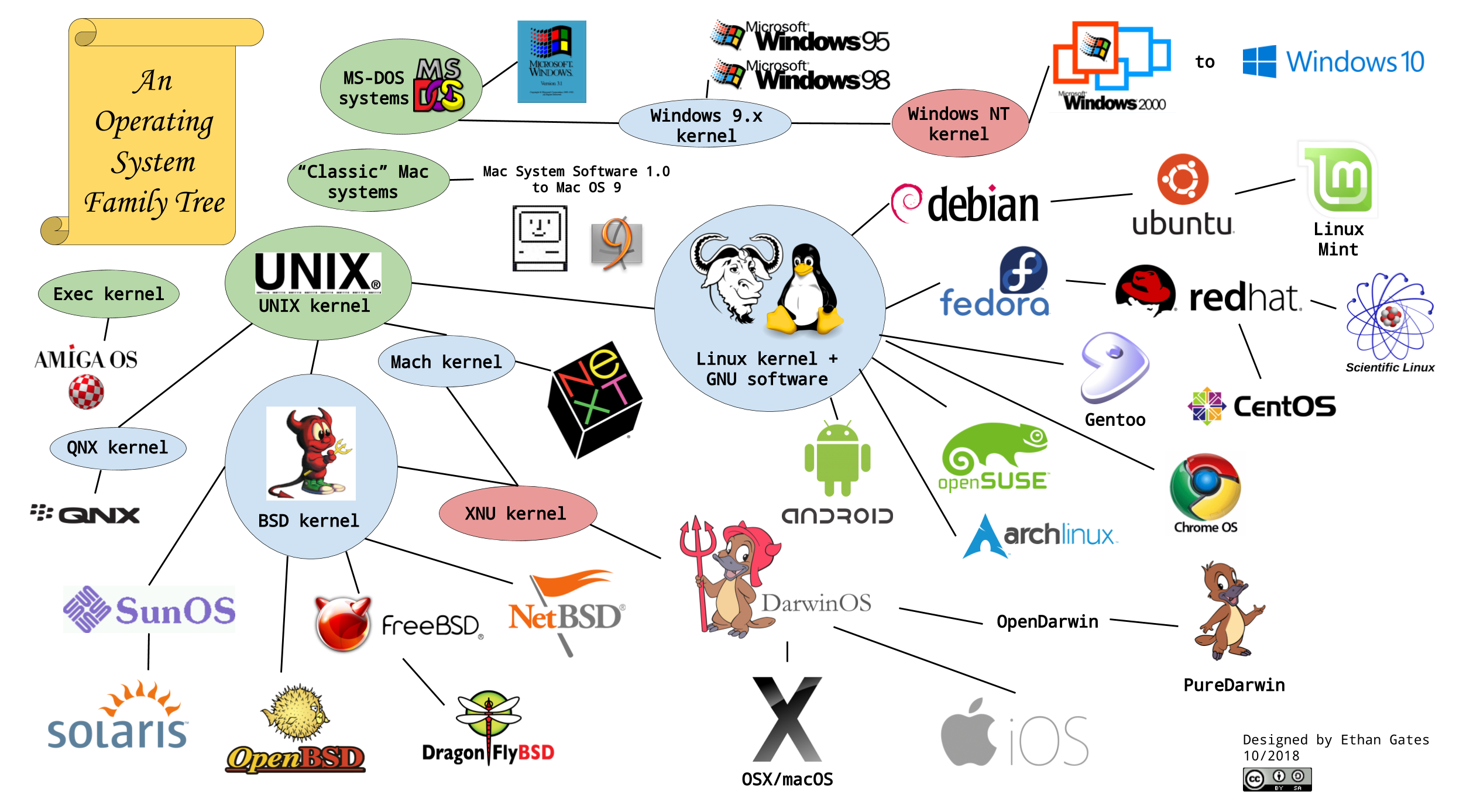

UNIX was a complete operating system created by Bell Labs in 1969 - but it was originally distributed with full source code, meaning others could see exactly how UNIX worked and develop their own modifications. There is a whole complex web of UNIX offshoots that emerged in the '80s and early '90s, but the upshot is that a lot of people took UNIX's kernel and used it as the base for a number of other kernels and operating systems, including modern-day macOS and Linux distributions. (The modifications to the kernel mean that these operating systems, while originally derived in some way from UNIX, are not technically UNIX itself - hence you will see these systems referred to as "Unix-like" or "*nix")

The result of this shared lineage is that Mac and Linux operating systems are similar to each other in many fundamental ways, which in turn makes cross-compatible software relatively easy to create. They share a basic file system structure and usually a default terminal and programming language (Bash) for doing command line work, for instance.

Meanwhile there was Microsoft, which, though it originally created its own (phenomenally popular!) variant of UNIX in the '80s, switched over in the late '80s/early '90s to developing its own proprietary kernel, entirely separate from UNIX. This was the NT kernel, which is at the heart of every Windows operating system since Windows XP (earlier consumer versions like Windows 95 and 98 were still built on top of MS-DOS). These fundamental differences in architecture (affecting not just clearly visible characteristics like file systems/naming and command line work, but extremely low-level methods of how software communicates with hardware) make it more difficult to make software cross-compatible between both *nix and Windows operating systems without a bunch of time and effort from programmers.

So, given the choice between these two major branches in computing, why this appearance that Mac hardware and operating systems have won out for digital preservation tasks, despite being the more expensive option for constantly cash-strapped cultural institutions and employees?

It seems to me it has very little to do with an actual affinity for Macs/Apple and everything to do with Linux and GNU and open source software. Unlike either macOS or Windows, Linux systems are completely open source - meaning any software developer can look at the source code for Linux operating systems and thus both design and make available software that works really well for them without jumping through any proprietary hoops (like the App Store or Windows Store). It just so happens that Macs, because at their core they are also Unix-like, can run a lot of the same, extremely useful software, with minimal to no effort. So a lot of the software that makes digital preservation easier was created for Unix-like environments, and we wind up recommending/teaching on Macs because given the choice between the macOS/Windows monoliths, Macs give us Bash and GNU tools and other critical pieces that make our jobs less frustrating.

Microsoft, at least in the '90s and early aughts, didn't make as much of an effort to appeal directly to developers (who, very very broadly speaking, value independence and the ability to do their own, unique thing to make themselves or their company stand out) - they made their rampant success on enterprise-level business, selling Windows as an operating system that could be managed en masse as the desktop environment for entire offices and institutions with less troubleshooting of individual machines/setups.

(Also key is that Microsoft kept their systems cheaper by never getting into the game of hardware manufacturing - unlike Mac operating systems, Windows is meant to run on third-party hardware made by companies like HP, Dell, IBM, etc. The competition between these manufacturers keeps prices down, unlike Apple's all-in-one hardware + OS systems, making them the cheaper option for businesses opening up a new office to buy a lot of computers all at once. Capitalism!)

As I'll get into soon, there have been some very intriguing reversals to this dynamic in recent years. But, in summary: the reason Macs have seemed to dominate digital preservation workshops and education is that there was software that made our jobs easier. It was never that you can't do these things with Windows.

Windows File System

Beyond the superficial desktop design differences (dock vs. panel, icons), the first thing any user moving between macOS and Windows probably notices are the differences between Finder (Mac) and File Explorer (Windows) in navigating between files and folders.

In *nix systems, all data storage devices - hard disk drives, flash drives, external discs or floppies, or partitions on those devices - are mounted into the same "root" file system as the operating system itself. In Windows, by contrast, every single storage device or partition is separated into its own "drive" (I use quotes because two partitions might exist on the same physical hard disk drive, but are identified by Windows as separate drives for the purpose of saving/working with files), identified by a letter - C:, D:, G:, etc. Usually, the Windows operating system itself, and probably most of a casual user's files, are saved on to the default C: drive. (Why start at C? It harkens back to the days of floppy drives and disk operating systems, but that's a story for another post). From that point, any additional drives, disks or partitions are assigned another letter (if for some reason you need more than 26 drives or partitions mounted, you'll have to resort to some trickery!).

Also, file paths in Windows use backslashes (e.g. C:\Users\Ethan\Desktop) instead of forward slashes (/Users/Ethan/Desktop), and if you're doing command-line work, Bash (the default macOS CLI) is case-sensitive about commands, and on a typical Linux file system /Users/Ethan/Desktop/sample.txt and /Users/Ethan/Desktop/SAMPLE.txt are different files. Windows' DOS/PowerShell command line interfaces (more on these in a minute) and file system are not case-sensitive (so SAMPLE.txt would overwrite sample.txt). One wrinkle: macOS volumes are case-insensitive by default too, so the same overwrite can happen on a Mac even though Bash itself cares about case.

¯\_(ツ)_/¯

Fundamentally, I don't think these minor differences affect the difficulty either way of performing common tasks like navigating directories in the command line or scripting from Macs - it's just different, and an important thing to keep in mind to remember where your files live.
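If it helps to see those differences side by side, here is a minimal sketch using Python's standard pathlib module, which can model both path styles on any computer. The file names are just the examples from above, and the POSIX comparison reflects the case-sensitive convention rather than any particular Mac's disk settings.

```python
from pathlib import PurePosixPath, PureWindowsPath

# The same example file, written in each system's path style.
win_path = PureWindowsPath(r"C:\Users\Ethan\Desktop\sample.txt")
nix_path = PurePosixPath("/Users/Ethan/Desktop/sample.txt")

# Windows paths carry a drive letter; *nix paths hang off a single root.
print(win_path.drive)  # C:
print(nix_path.root)   # /

# Windows-style comparison ignores case; POSIX-style comparison does not.
same_on_windows = PureWindowsPath("SAMPLE.txt") == PureWindowsPath("sample.txt")
same_on_posix = PurePosixPath("SAMPLE.txt") == PurePosixPath("sample.txt")
print(same_on_windows, same_on_posix)  # True False
```

The "Pure" path classes never touch the disk, so this runs the same way whether you are on macOS, Windows or Linux.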

What DOES make things more difficult is when you start trying to move files between macOS and Windows file systems. You've probably encountered issues when trying to connect external hard drives formatted for one file system to a computer formatted for another. Though there's a lot of programming differences between file systems that cause these issues (logic, security, file sizes, etc.), you can see the issue just with the minor, user-visible differences just mentioned: if a file lives at E:\DRIVE\file.txt on a Windows-formatted external drive, how would a computer running macOS even understand where to find that file?

Modern Windows systems/drives are formatted with a file system called NTFS (for many years Microsoft used FAT and its variants such as FAT16 and FAT32, and Windows can still read and write these legacy file systems). Apple, meanwhile, is in the midst of transitioning from its longtime file system HFS+ (which you may have also seen referred to in software as "Mac OS Extended") to a new file system, APFS.

File system transitions tend to be a headache for users. NTFS and HFS+ have at least been around for long enough that quite a lot of software has been made to allow moving back and forth/between the two file systems (if you are on Windows, HFS Explorer is a great free program for exploring and extracting files on Mac-formatted drives; while on macOS, the NTFS-3G driver allows for similarly reading and writing from Windows-formatted storage). Since it is still pretty new, I am less aware of options for reading APFS volumes on Windows, but will happily correct that if anyone has recommendations!

** I've gotten a lot of questions in the past about the exFAT file system, which is theoretically cross-compatible with both Mac and Windows systems. I personally have had great success with formatting external hard drives with exFAT, but have also heard a number of horror stories from those who have tried to use it and been unable to mount the drive into one or another OS. Anecdotally it seems that drives originally formatted exFAT on a Windows computer have better success than drives formatted exFAT on a Mac, but I don't know enough to say why that might be the case. So: proceed, but with caution!

Executable Files

No matter what operating system you're using, executable files are pieces of code that directly instruct the computer to perform a task (as opposed to any other kind of data file, whose information usually needs to be parsed/interpreted by executable software in some way to become in any way meaningful - like a word processing application opening a text file, where the application is the executable and the text file is the data file). As a common user, anytime you double-clicked on a file to open an application or run a script, whether a GUI or a command line program, you ran an executable.

Where executable files live and how they're identified to you, the user, varies a bit from one operating system to the next. A macOS user is accustomed to launching installed programs from the Applications folder, which are all actually .app files (.apps in turn are actually secret "bundles" that contain executables and other data files inside the .app, which you can view like a folder if you right-click and select "Show Package Contents" on an application).

Executable files (with or without a file extension) might also be automatically identified by macOS anywhere and labeled with this icon:

And finally, because executable files are also called binaries, you might find executables in a folder labeled "bin" (for instance, Homebrew puts the executable files for programs it installs into /usr/local/bin)

Windows-compatible executables are almost always identified with .exe file extensions. Applications that come with an installer (or, say, from the Windows Store) tend to put these in directories identified by the application name in the "Program Files" folder(s) by default. But some applications you just download off of GitHub or SourceForge or anywhere else on the internet might just put them in a "bin" folder or somewhere else.

(Note: script files, which are often identified by an extension according to the programming language they were written in - .py files for Python, .rb for Ruby, .sh for Bash, etc. - can usually be made automatically executable like an .exe but are not necessarily by default. If you download a script, whether working on Mac or Windows, you may need to check whether it's automatically come to you as an executable or more steps need to be taken. They often don't, because downloading executables is a big no-no from the perspective of malware protection.)

Command Prompt vs. PowerShell

On macOS, we usually do command line work (which offers powerful tools for automation, cool/flexible software, etc.) using the Terminal application and Bash shell/programming language.

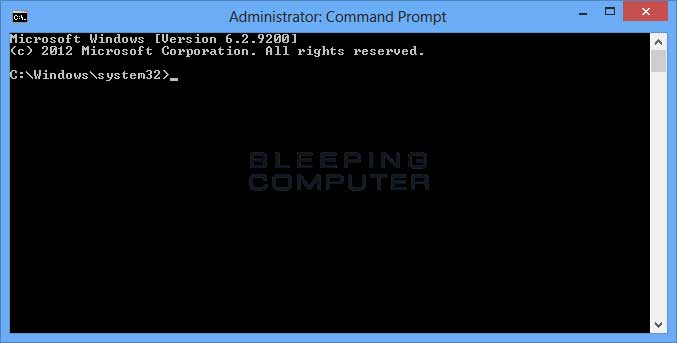

Modern Windows operating systems are a little more confusing because they actually offer two command line interfaces by default: Command Prompt and PowerShell.

The Command Prompt application uses DOS commands and has essentially not changed since the days of MS-DOS, Microsoft's pre-Windows operating system that was entirely accessed/used via command line (in fact, early Windows operating systems like Windows 3.1 and Windows 95 were to a large degree just graphic user interfaces built on top of MS-DOS).

The stagnation of Command Prompt/DOS was another reason developers started migrating over to Linux and Bash - to take advantage of more advanced/convenient features for CLI work and scripting. In 2006, Microsoft introduced PowerShell as a more contemporary, powerful command line interface and programming language aimed at developers in an attempt to win some of them back.

While I recognize that PowerShell can do a lot more and make automation/scripting way easier, I still tend to stick with Command Prompt/DOS when doing or teaching command line work in Windows. Why? Because I'm lazy.

The Command Prompt/DOS interface and commands were written around the same time as the original Bash - so even though development continued on Bash while Command Prompt stayed the same, a lot of the most basic commands and syntax remained analogous. For instance, the command for making a directory is the same in both Terminal and Command Prompt ("mkdir"), even if the specific file paths are written out differently.

So for me, while learning Command Prompt is a matter of tweaking some commands and knowledge that I already know because of Bash, learning PowerShell is like learning a whole new language - making a directory in PowerShell, e.g., is done with the command shortcut "md".* It's sort of like saying I already know Castilian Spanish, so I can tweak that to figure out a regional dialect or accent - but learning Portuguese would be a whole other course.

But your mileage on which of those things is easier may vary! Perhaps it's clearer to keep your *nix and Windows knowledge totally separate and take advantage of PowerShell scripting. But this is just to explain the Command Prompt examples I may use for the remainder of this post.

* ok, yes, "mkdir" will also work in PowerShell, because PowerShell ships with a built-in "mkdir" function (and "md" alias) that wraps its real directory-making cmdlet, New-Item - this comparison doesn't work so well with the basic stuff, but it gets true for more advanced functions/commands and you know it, Mx. Know-It-All

The PATH Variable

The PATH system variable is a fun bit of computing knowledge that hopefully ties together all the three previous topics we've just covered!

Every modern operating system has a default PATH variable set when you start it up (system variables are basically configuration settings that, as their name implies, you can update or change). PATH determines where your command line interface looks for executable files/binaries to use as commands.

Here's an example back on macOS. Many of the binaries you know and execute as commands (ls, mkdir, cp, rm) live in /bin or /usr/bin in the file system. By default, the PATH variable is set as a list that contains both "/bin" and "/usr/bin" - so that when you type

$ mkdir /my/home/directory/new-folder

into Terminal, the computer knows to look in /bin and /usr/bin to find the executable file that contains instructions for running the "mkdir" command. (A few commands, like cd, are built into Bash itself rather than living in their own executable files, but the vast majority work this way.)

Package managers, like Homebrew, usually put all the executables for the programs you install into one folder (in Homebrew's case, /usr/local/bin) and ALSO automatically update the PATH variable so that /usr/local/bin is added to the PATH list. If Homebrew put, let's say, ffmpeg's executable file into the /usr/local/bin folder, but never updated the PATH variable, your Terminal wouldn't know where to find ffmpeg (and would just return a "command not found" error if you ran any command starting with $ ffmpeg), even though it *is* somewhere on your computer.

So let's move over to Windows: because Command Prompt, by default, does not have a package manager, if you download a command line program like ffmpeg, Command Prompt will not automatically know where to find that command - unless a) you move the ffmpeg directory, executable file included, into somewhere already in your PATH list (maybe somewhere like "C:\Program Files\"), or b) update the PATH list to include where you've put the ffmpeg directory.
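To make that lookup a little less abstract, here is a rough sketch in Python of asking "where would this command run from?" on either kind of system. It leans on the standard library's shutil.which, which walks the PATH directories in order much as a shell does. The find_command wrapper and its path_list argument are names I've made up for illustration.

```python
import os
import shutil

def find_command(name, path_list=None):
    """Return the full path of the executable a shell would run, or None."""
    # path_list is an optional override for illustration: a string of
    # directories joined by os.pathsep (":" on *nix, ";" on Windows).
    # When it is None, shutil.which falls back to the real PATH variable.
    return shutil.which(name, path=path_list)

location = find_command("ffmpeg")
if location is None:
    print("ffmpeg is not in any PATH directory (command not found)")
else:
    print("ffmpeg would run from:", location)
```

If this prints the "not found" message even though you know ffmpeg is on your computer, that is exactly the situation described above: the executable exists, but its folder isn't in the PATH list.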

I personally work with Windows rarely enough that I tend to just add a command line program's directory to the PATH variable when needed - which usually requires opening a fresh Command Prompt window (and occasionally signing out or rebooting) before the change takes effect. So I can see how if you were adding/installing command line programs for Windows more frequently, it would probably be convenient to just create/determine "here's my folder of command line executables", update the PATH variable once, and then just put new downloaded executables in that folder from then on.

*** I do NOT recommend putting your Downloads or Documents folder, or any user directory that you can access/change files in without admin privileges, into your PATH!!! It's an easy trick for accidentally-downloaded malware to get into a commonly-accessed folder (and/or one that doesn't require raised/administrative privileges) and then be able to run its executable files from there without you ever knowing because it's in your PATH.

Updating the PATH variable was a pain in the ass in Windows 7 and 8 but is much less so now on Windows 10.

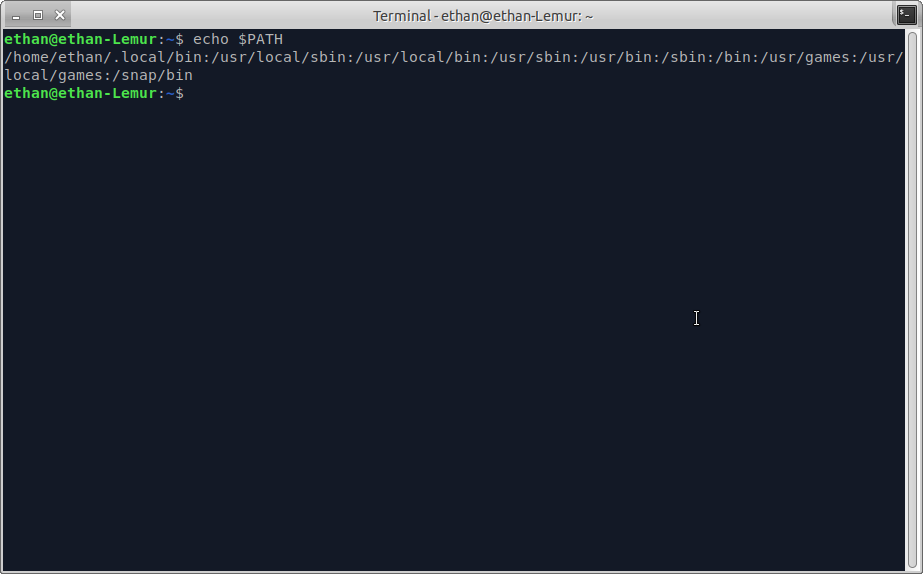

If you are in the command line and want to quickly troubleshoot what file paths/directories are and aren't in your PATH, you can use the "echo" command, which is available on both *nix and Windows systems. In *nix/Bash, variables are referenced in commands and scripts with a leading dollar sign, like $PATH, so

$ echo $PATH

would return something like so, with the different file paths in the PATH list separated by colons:

While on Windows, variables are identified like %THIS%, so you would run

> echo %PATH%
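The raw output of either command is one long line, which can be hard to scan. As a small sketch (assuming you have Python installed, which Windows does not include by default), the same list can be printed one directory per line on either system:

```python
import os

# os.pathsep is ":" on *nix and ";" on Windows, so this split works on both.
path_entries = os.environ.get("PATH", "").split(os.pathsep)

for number, directory in enumerate(path_entries, start=1):
    # Flag entries that point at folders which don't exist on this machine.
    note = "" if os.path.isdir(directory) else "  (missing)"
    print(f"{number:>2}. {directory}{note}")
```

The order matters: when two folders contain a program with the same name, the one listed earlier wins.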

Package Management

Now, as I said above, package managers like Homebrew on macOS can usually handle updating/maintaining the PATH variable so you don't have to do it by hand. For some time, there wasn't any equivalent command line package manager (at least that I was aware of) for Windows, so there wasn't any choice in the matter.

That's no longer true! Now there's Chocolatey, a package manager that will work with either Command Prompt or PowerShell. (Note: Chocolatey *uses* PowerShell in the background to do its business, so even though you can install and use Chocolatey with Command Prompt, PowerShell still needs to be on your computer. As long as your computer/OS is newer than 2006, this shouldn't be an issue).

Users of Homebrew will generally be right at home with Chocolatey: instead of $ brew install [package-name], you can just type > choco install [package-name] and Chocolatey will do the work of downloading the program and putting the executable in your PATH, so you can get right back to command line work with your new program. It works whether you're on Windows 7 or 10.

The drawback, at least if you're coming over from using macOS and Homebrew, is that Chocolatey is much more limited in the number/type of packages it offers. That is, Chocolatey still needs Windows-compatible software to offer, and as we went over before, some *nix software has just never been ported over to supported Windows versions (or at least, into Chocolatey packages). But many of the apps/commands you probably know and love as a media archivist - ffmpeg, youtube-dl, mediainfo, exiftool, etc. - are there!

Scripting

If you've been doing introductory digipres stuff, you've probably dipped your toes into Bash scripting - which is just the idea of writing some Bash code into a plain text file, containing instructions for the Terminal. Your computer can then run the instructions in the script without you needing to type out all the commands into Terminal by hand. This is obviously most useful for automating repetitive tasks, where you might want to run the same set of commands over and over again on different directories or sets of files, without constant supervising and re-typing/remembering commands. Scripts written in Bash and intended for Mac/Linux Terminals tend to be identified with ".sh" file extensions (though not exclusively).

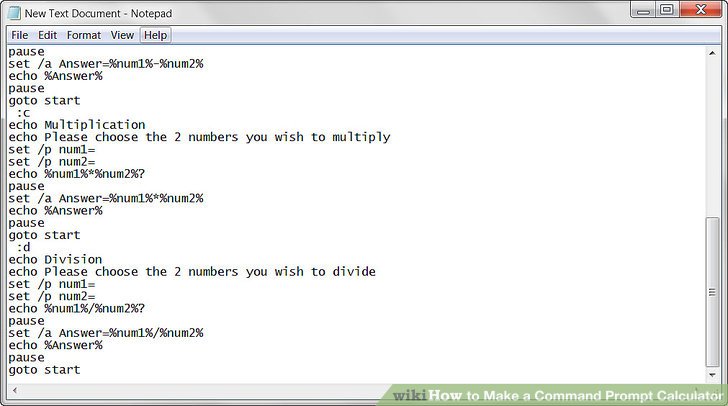

Scripting is absolutely still possible with Windows, but we have to adjust for the fact that the Windows command line interface doesn't speak Bash. Command Prompt scripts are written in DOS style, and their benefit for Windows systems (over, say, PowerShell scripts) is that they are extremely portable - it doesn't much matter what version of Windows or PowerShell you're using.

Command Prompt scripts are often called "batch files" and can usually be identified by two different file extensions: .bat or .cmd

(The difference between the two extensions/file formats is negligible on a modern operating system like Windows 7 or 10. Basically, BAT files were used with MS-DOS, and CMD files were introduced with Windows NT operating systems to account for the slight improvements from the MS-DOS command line to NT's Command Prompt. But, NT/Command Prompt remained backwards-compatible with MS-DOS/BAT files, so you would only ever encounter issues if you tried to run a CMD file on MS-DOS, which, why are you doing that?)

Actually writing Windows batch scripts for Command Prompt is its own whole tutorial. Which - thankfully - I don't have to write, because you can follow this terrific one instead!

Because Command Prompt batch scripts are still so heavily rooted in decades-old strategies (DOS) for talking to computers, they are pretty awkward to look at and understand.

A lot of software developers skip right to a more advanced/human-readable programming language like Python or Ruby. Scripts in these languages do also have the benefit of being cross-platform, since Python, for example, can be installed on either Mac or Windows. But Windows OS will not understand them by default, so you have to go install them and set up a development environment rather than start scripting from scratch as you can with BAT/CMD files.
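For a taste of what that cross-platform benefit looks like, here is a minimal sketch of a very digipres-flavored task, generating checksums for every file in a folder, written in Python. Nothing here is specific to Mac or Windows. The function names are my own, and SHA-256 is just one reasonable choice of algorithm.

```python
import hashlib
from pathlib import Path

def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large video files never sit fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def checksum_folder(folder):
    """Return {relative path: checksum} for every file under the folder."""
    folder = Path(folder)
    return {
        # as_posix() writes relative paths with forward slashes everywhere,
        # so a manifest made on Windows matches one made on a Mac.
        item.relative_to(folder).as_posix(): sha256_of_file(item)
        for item in sorted(folder.rglob("*"))
        if item.is_file()
    }
```

Calling checksum_folder(r"C:\Users\Ethan\Desktop\transfer") on Windows or checksum_folder("/Users/Ethan/Desktop/transfer") on macOS (both hypothetical folders) would produce the same manifest for the same files.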

Windows Registry

The Windows Registry is fascinating (to me!). Whereas in a *nix operating system, critical system-wide configuration settings are contained as text in files (usually high up under the "root" level of your computer's file system, where you need special elevated permissions to access and change them), in Windows these settings (beyond the usual, low-level user settings you can change in the Settings app or Control Panel) are stored in what's called the Windows Registry.

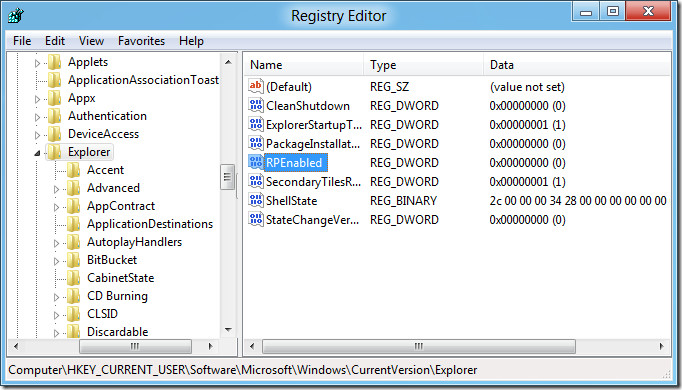

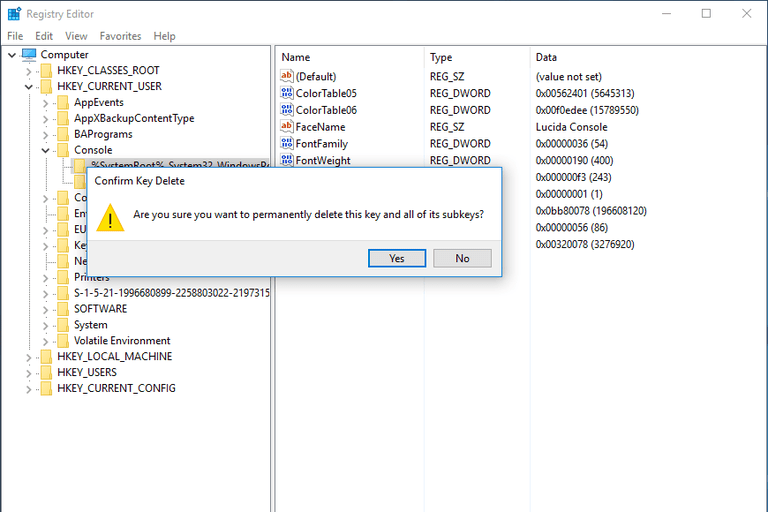

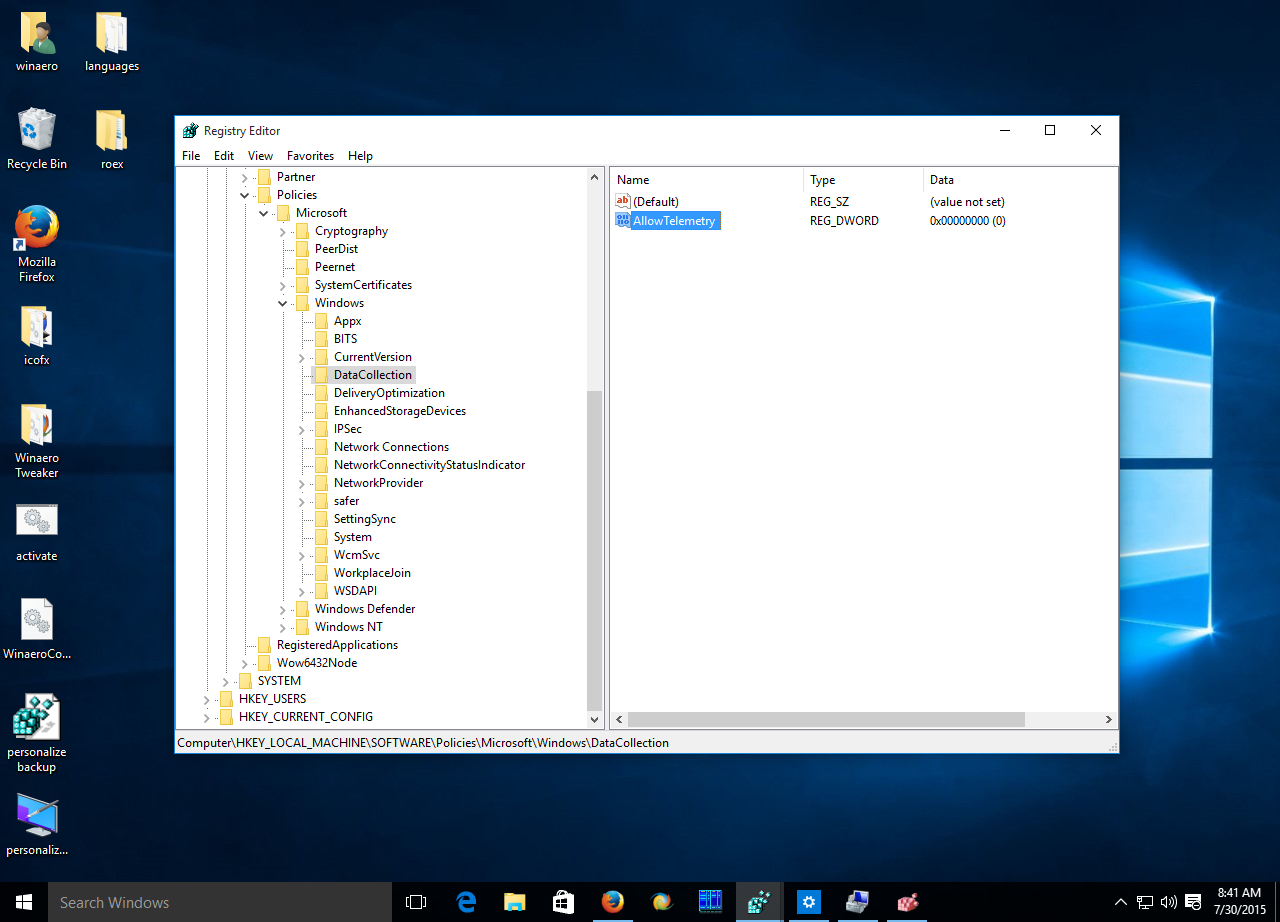

The Windows Registry is a database where configuration settings are stored, not as text in files, but as "registry values" - pieces or strings of data that store instructions. These values are stored in "keys" (folders), which are themselves stored in "hives" (larger folders that categorize the keys within related subfolders).

Registry values can be a number of different things - text, a string of characters representing hex code, a boolean value, and more. Which kind of data they are, and what you can acceptably change them to, depends entirely on the specific setting they are meant to control. However, values and keys are also often super abstracted from the settings they change - meaning it can be very very hard to tell what exactly a registry value can change or control just by looking at it.

For that reason, casual users are generally advised to stay out of the Windows Registry altogether, and those who are going to change something are advised to make a backup of their Registry before making edits, so they can restore it if anything goes wrong.

Just as you may never mess around in your "/etc" folder on a Mac, even as a digitally-minded archivist, you may or may not ever need to mess in the Windows Registry! I've only poked around in it to do things like removing that damn OneDrive tab from the side of my File Explorer windows so I stop accidentally clicking on it. But I thought I'd mention it since it's a pretty big difference in how the Windows operating and file systems work.
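If you're curious to look without touching, here is a cautious, read-only sketch using Python's standard winreg module (which only exists on Windows, hence the platform check). It reads the per-user "Path" value from the HKEY_CURRENT_USER\Environment key, which is where Windows keeps a user's own environment variables. The two function names are made up for illustration, and nothing here writes to the Registry.

```python
import sys

def split_registry_path(full_path):
    """Split 'HIVE\\Key\\Subkey' into (hive name, key path)."""
    hive, _, key_path = full_path.partition("\\")
    return hive, key_path

def read_registry_value(full_path, value_name):
    """Read one value (Windows only); returns None elsewhere or if absent."""
    if sys.platform != "win32":
        return None
    import winreg  # standard library, but only present on Windows
    hive_name, key_path = split_registry_path(full_path)
    hive = getattr(winreg, hive_name)  # e.g. winreg.HKEY_CURRENT_USER
    try:
        with winreg.OpenKey(hive, key_path) as key:
            value, _value_type = winreg.QueryValueEx(key, value_name)
            return value
    except FileNotFoundError:
        return None

hive, key_path = split_registry_path(r"HKEY_CURRENT_USER\Environment")
print(hive, "->", key_path)
print(read_registry_value(r"HKEY_CURRENT_USER\Environment", "Path"))
```

On a Mac or Linux machine the last line simply prints None, since there is no Registry to read.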

How to Cheat: *nix on Windows

OK. So ALL OF THIS has been based on getting more familiar with Windows and working with it as-is, in its quirky not-Unix-like glory. I wanted to write these things out, because to me, understanding the differences between the major operating systems helped me get a better handle on how computers work in general.

But guess what? You could skip all that. Because there are totally ways to just follow along with *nix-based, Bash-centric digital preservation education on Windows, and get everyone on the same page.

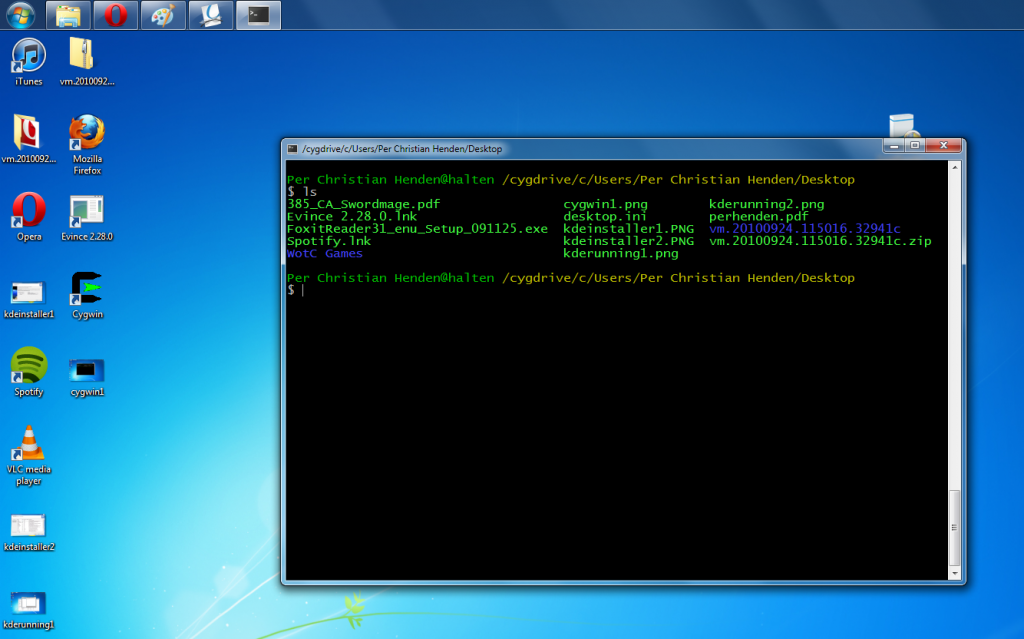

On Windows 7, there were several programs that would port a Bash-like command line environment and a number of Linux/GNU utilities to work in Windows - Cygwin probably being the most popular. Crucially, this was not a way to run Linux/Unix-like applications on Windows - it was just a way of doing the most basic tasks of navigating and manipulating files on your Windows computer in a *nix-like way, with Bash commands (the software essentially translated them into DOS/Windows commands behind-the-scenes).

But a way way more robust boon for cross-compatibility was the introduction of the Windows Subsystem for Linux in Windows 10. Microsoft, for many years the fiercest commercial opponent of open source software/systems, has in the last ten years or so increasingly been embracing the open source community (probably seeing how Linux took over the web and making a long-term bet to win back the business of software/web developers).

The WSL is a complete compatibility layer for, in essence, installing Linux operating systems like Ubuntu or Kali or openSUSE *inside* a Windows operating system. Though it still has its own limitations, this basically means that you can run a Bash terminal, install Linux software, and navigate around your computer just as you would on a *nix-only system - from inside Windows.

The major drawback from a teaching perspective: it is still a Linux operating system Windows users will be working with (I'd personally recommend Ubuntu for digipres newbies) - meaning there may still be nagging differences from Mac users (command line package management using "apt" instead of Homebrew for instance, although this too can be smoothed over with the addition of Linuxbrew). This little tutorial covers some of those, like mounting external drives into the WSL file system and how to get to your Windows files in the WSL.
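On the "how to get to your Windows files" point: by default the WSL mounts each Windows drive under /mnt, using the lowercase drive letter as the folder name. Here is a small sketch of that translation in Python. The windows_to_wsl function is a hypothetical helper of my own, and it assumes the default mount location has not been reconfigured.

```python
from pathlib import PurePosixPath, PureWindowsPath

def windows_to_wsl(windows_path, mount_root="/mnt"):
    """Translate a drive-letter path to where WSL mounts it by default."""
    path = PureWindowsPath(windows_path)
    if len(path.drive) != 2 or path.drive[1] != ":":
        raise ValueError(f"expected a drive-letter path, got {windows_path!r}")
    drive_letter = path.drive[0].lower()
    # path.parts[0] is the drive anchor ("C:\\"); the rest are folder/file names.
    return str(PurePosixPath(mount_root, drive_letter, *path.parts[1:]))

print(windows_to_wsl(r"C:\Users\Ethan\Desktop\sample.txt"))
# /mnt/c/Users/Ethan/Desktop/sample.txt
```

So the Desktop you see in File Explorer is the same folder you would cd into at /mnt/c/Users/Ethan/Desktop from the Ubuntu terminal.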

You can follow Microsoft's instructions for installing Ubuntu on the WSL here (it may vary a bit depending on which update/version exactly of Windows 10 you're running).

That's it! To be absolutely clear at the end of things here, I've generally not worked with Windows for most digital/audiovisual preservation tasks, so I'm hardly an expert. But I hope this post can be used to get Windows users up to speed and on the same page as the Mac club when it comes to the basics of using their computers for digipres fun!