Color is Math

I know, I hate to break it to you. I'm not thrilled about it either - one of my favorite memories from high school is walking straight up to my Statistics teacher on the last day of senior year and proudly announcing that it was the last time I was ever going to be in a math class. (Yes, I do feel a bit guilty about the look of befuddled disappointment on his face, but by god, I was right.)

But it's true: at least when it comes to video preservation, color is math. Everything else you thought you knew about color - that it's light, that you get colors by mixing together other colors, that it's pretty - is irrelevant.

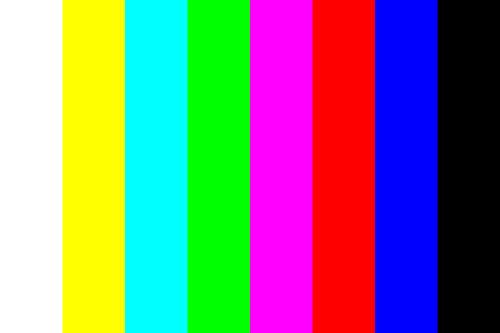

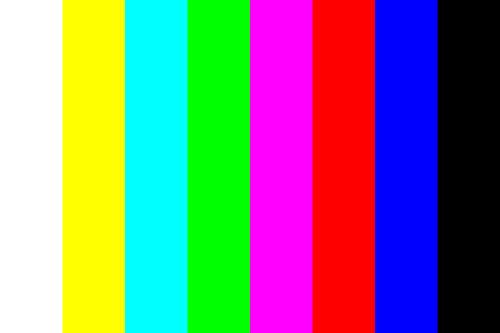

Just now, you thought you were looking at color bars? Wrong. Numbers.

When I first started learning to digitize analog video, the concept of luminance and brightness made sense. Waveform monitors made sense. A bright spot in a frame of video shows up as a spike on the waveform in a way that appeases visual logic. When digitizing, you wanted to keep images from becoming too bright or too dark, lest visual details at these extremes be lost in the digital realm. All told, pretty straightforward.

Vectorscopes and chrominance/color information made less sense. There were too many layers of abstraction, and not just in "reading" the vectorscope and translating it to what I was seeing on the screen - there was something about the vocabulary around color and color spaces, full of ill-explained and overlapping acronyms (as I have learned, the only people who love their acronyms more than moving image archivists and metadata specialists are video engineers).

I'd like to sift through some of the concepts, redundancies, and labeling/terminology that threw me off personally for a long time when it came to color.

CIE XYZ OMG

Who in the rainbow can draw the line where the violet tint ends and the orange tint begins? Distinctly we see the difference of the colors, but where exactly does the one first blendingly enter into the other? So with sanity and insanity.—Herman Melville, Billy Budd

I think it might help if we start very very broad in thinking about color before narrowing in on interpreting color information in the specific context of video.

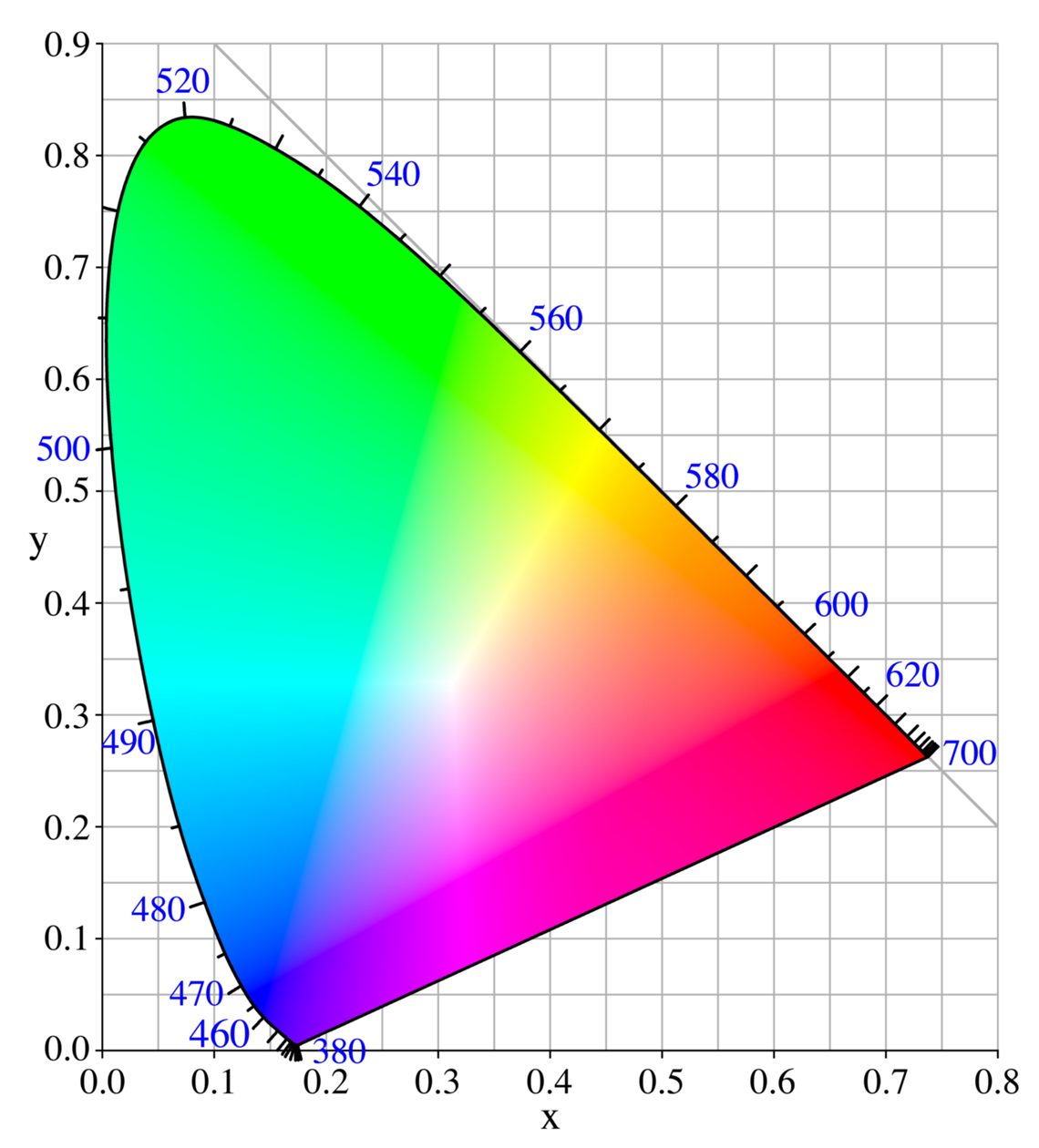

In the early 20th century, color scientists attempted to define quantitative links between the visible spectrum of electromagnetic wavelengths and the physiological perception of the human eye. In other words - in the name of science, they wanted to standardize the color "red" (and all the other ones too, I guess).

Given the insanely subjective process of assigning names to colors, how do you make sure that what you and I (or more importantly, two electronics manufacturers) call "red" is the same? By assigning an objective system of numbers/values to define what is "red" - and orange, yellow, green, etc. etc. - based on how light hits and is interpreted by the human eye.

After a number of experiments in the 1920s, the International Commission on Illumination (abbreviated CIE from the French - like FIAF) developed in 1931 what is called the CIE XYZ color space: a mathematical definition of, in theory, all color visible to the human eye. The "X", "Y" and "Z" stand for specific things that are not "colors" exactly, so I don't even want to get into that here.

tl;dr your computer screen, compared to the mathematical limits of the natural world - sucks.

That's to be expected though! Practical implementations of any standard will always be more limited than an abstract model.
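To make "your screen sucks" a bit more concrete: on the CIE 1931 chromaticity diagram, a typical sRGB screen can only show the colors inside the triangle formed by its three primaries. Below is a minimal Python point-in-triangle sketch, assuming the standard sRGB/Rec. 709 primary chromaticities. The coordinates used for spectral green near 520 nm are approximate, and the function names are made up for illustration.

```python
# Chromaticity (x, y) of the sRGB / Rec. 709 primaries.
SRGB_PRIMARIES = {
    "red": (0.64, 0.33),
    "green": (0.30, 0.60),
    "blue": (0.15, 0.06),
}

D65_WHITE = (0.3127, 0.3290)
# Approximate spot of pure spectral green (about 520 nm) on the CIE 1931 diagram.
SPECTRAL_GREEN_520NM = (0.074, 0.834)


def _side(o, a, p):
    """2D cross product of o->a and o->p; its sign says which side of line o-a p is on."""
    return (a[0] - o[0]) * (p[1] - o[1]) - (a[1] - o[1]) * (p[0] - o[0])


def in_gamut(xy, primaries=SRGB_PRIMARIES):
    """True if chromaticity xy lies inside (or on the edge of) the primaries' triangle."""
    r, g, b = primaries["red"], primaries["green"], primaries["blue"]
    sides = (_side(r, g, xy), _side(g, b, xy), _side(b, r, xy))
    # Inside means xy never falls on opposite sides of two different edges.
    return not (min(sides) < 0 < max(sides))


print(in_gamut(D65_WHITE))             # True: the screen's own white is displayable
print(in_gamut(SPECTRAL_GREEN_520NM))  # False: visible to you, outside the screen's gamut
```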

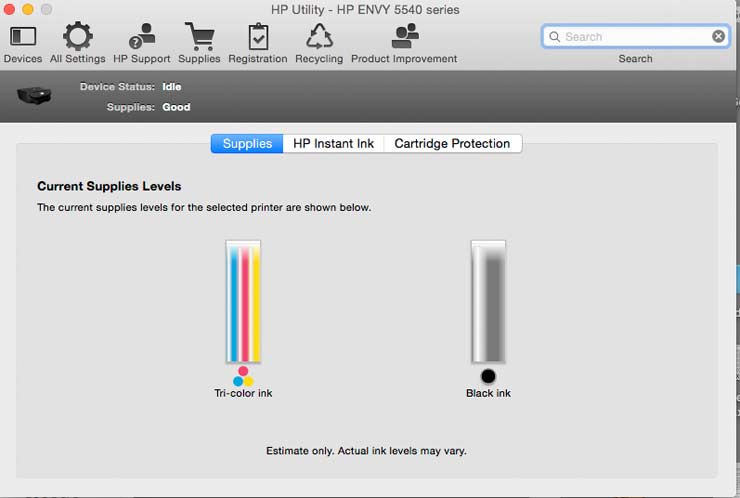

So even if it basically only exists in theory (and in the unbounded natural world around us), the CIE XYZ color space and its definition of color values served as the foundation for most color spaces that followed it. A color space is the system for creating/reproducing color employed by any particular piece of technology. Modern color printers, for example, use the CMYK color space: a combination of four color primaries (cyan, magenta, yellow, and "key"/black) that when mixed together by certain, defined amounts, create other colors, until you have hard copies of your beautiful PowerPoint presentation ready to distribute.
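For a taste of the "certain, defined amounts" idea, here's the naive textbook RGB-to-CMYK formula as a Python sketch. Real printer drivers go through color management (ICC profiles) tuned to specific inks and paper, so treat this only as an illustration of one color space's numbers being re-expressed in another. `rgb_to_cmyk_naive` is a hypothetical name.

```python
def rgb_to_cmyk_naive(r, g, b):
    """Naive RGB (each 0..1) -> CMYK (each 0..1); no color management involved."""
    k = 1.0 - max(r, g, b)  # black ink covers whatever darkness all channels share
    if k == 1.0:            # pure black: no colored ink needed at all
        return (0.0, 0.0, 0.0, 1.0)
    c = (1 - r - k) / (1 - k)
    m = (1 - g - k) / (1 - k)
    y = (1 - b - k) / (1 - k)
    return (c, m, y, k)


print(rgb_to_cmyk_naive(1, 0, 0))        # (0.0, 1.0, 1.0, 0.0): red = magenta + yellow
print(rgb_to_cmyk_naive(0.5, 0.5, 0.5))  # (0.0, 0.0, 0.0, 0.5): mid gray = half black
```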

Again, just like CIE XYZ, any color space you encounter is math - it's just a method of saying this number means orange and this number means blue and this number means taupe. But, just like with all other kinds of math, color math rarely stays that straightforward. The way each color space works, the way its values are calculated, and the gamut it can cover largely depend on the vagaries and specific limitations of the piece of technology it's being employed with/on. In the case of CIE XYZ, it's the human eye - in the case of CMYK, it's those shitty ink cartridges on your desktop inkjet printer that are somehow empty again even though you JUST replaced them.
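Here's what "this number means orange" looks like in practice: a sketch that converts a linear-light sRGB color into CIE XYZ using the 3×3 matrix from the sRGB standard (rounded to four places), then into the (x, y) chromaticity coordinates used to draw the familiar horseshoe-shaped diagram. It assumes the input has already had its gamma removed; the helper names are hypothetical.

```python
# Linear-light sRGB (Rec. 709 primaries, D65 white) -> CIE XYZ,
# matrix as published in the sRGB standard (rounded to 4 places).
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def linear_srgb_to_xyz(rgb):
    """Multiply a linear (not gamma-encoded) RGB triple by the matrix."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in SRGB_TO_XYZ)


def xyz_to_xy(xyz):
    """Project XYZ onto the 2D chromaticity diagram (brightness drops out)."""
    X, Y, Z = xyz
    total = X + Y + Z
    if total == 0:
        raise ValueError("chromaticity is undefined for pure black")
    return (X / total, Y / total)


white = linear_srgb_to_xyz((1.0, 1.0, 1.0))
print(white)             # approximately (0.9505, 1.0, 1.089)
print(xyz_to_xy(white))  # approximately (0.3127, 0.3290), the D65 white point
```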

So what about analog video? What are the specific limitations there?

Peak TV: Color in Analog Video

Video signals and engineering are linked pretty inextricably with the history of television broadcasting, the first "mass-market" application of video recording.

So tune in to what you know of television history. Much like with film, it started with black-and-white images only, right? That's because it's not just budding moving image archivists who find brightness easier to understand and manipulate in their practical work - it was the same for the engineers figuring out how to create, record, and broadcast a composite video signal. It's much easier for a video signal to tell the electron gun in a CRT television monitor what to do if frame-by-frame it's only working with one variable: "OK, be kinda bright here, now REALLY BRIGHT here, now sorta bright, now not bright at all", etc.

Compare that to: "OK, now display this calculated sum of three nonlinear tristimulus primary component values, now calculate it again, and again, and oh please do this just as fast as when we only gave you the brightness information you needed".

So in first rolling out their phenomenal, game-changing new technology, television engineers and companies were fine with "just" creating and sending out black-and-white signals. (Color film was only just starting to get commonplace anyway, so it's not like moving image producers and consumers were clamoring for more - yet!)

But as we moved into the early 1950s, video engineers and manufacturing companies needed to push their tech forward (capitalism, competition, spirit of progress, yadda yadda) into color. But consider this - now, not only did they need to figure out how to create a color video signal, they needed to do it while staying compatible with the entire system and market of black-and-white broadcasting. The broadcast companies and the showmakers and the government regulation bodies and the consumers who bought TVs and everyone else who JUST got this massive network of antennas and cables and frequencies and television sets in place were not going to be psyched to re-do the entire thing only a few years later to get color images. Color video signal needed to work on televisions that had been designed for black-and-white.

From the CIE research of the '20s and '30s, video engineers knew that the most efficient practical color spaces, and the ones covering the widest gamut of colors, were built by mixing values based on red, green, and blue (RGB) primaries.

But in a pure RGB color space, brightness is not carried on just one component, like in composite black-and-white video - each of the three primary values carries chrominance and luminance information at once (the R value tells you something about both "how red is this" and "how bright is this"). If you used such a system for composite analog video, what would happen if you piped that signal into a black-and-white television monitor, designed to only see and interpret one component, luminance? You probably would've gotten a weirdly dim and distorted image, if you got anything at all, as the monitor tried to interpret numbers containing both brightness and color as just brightness.

This is where color difference systems came into play. What engineers found is that you could still create a color composite video signal from three components - but instead of those being RGB values with chrominance and luminance mixed together, you could keep the brightness information for each point in the picture isolated in its own Y′ component (which Charles Poynton would insist I now call luma, for reasons of.....math), while all the new chroma/color information could be carried in two channels instead of three: a blue-difference (B′-Y′) and a red-difference (R′-Y′) component. From those three components, a color monitor can recover all three of the original R′, G′, and B′ values. Even though there's strictly speaking no "green" component in the signal, luma is itself a weighted sum of red, green, and blue, so once the monitor knows luma and the other two differences, it can work out what green has to be.
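The whole trick fits in a few lines. This Python sketch assumes the Rec. 601 luma weights (0.299, 0.587, 0.114, the same weights the 1953 NTSC color system used) and gamma-corrected R′G′B′ values between 0 and 1; `encode` and `decode` are hypothetical names. Notice that `decode` never receives a green value, yet hands one back.

```python
# Rec. 601 luma weights (the same weights as the 1953 NTSC color system).
KR, KG, KB = 0.299, 0.587, 0.114


def encode(r, g, b):
    """Gamma-corrected R'G'B' (each 0..1) -> (Y', B'-Y', R'-Y')."""
    y = KR * r + KG * g + KB * b
    return y, b - y, r - y


def decode(y, b_minus_y, r_minus_y):
    """Rebuild R'G'B' from luma plus the two color differences."""
    r = r_minus_y + y
    b = b_minus_y + y
    # Green was never sent, but luma is a weighted sum of all three
    # primaries, so green is whatever share of luma is left over.
    g = (y - KR * r - KB * b) / KG
    return r, g, b


signal = encode(0.2, 0.7, 0.4)
print(decode(*signal))  # approximately (0.2, 0.7, 0.4)
```

A neutral gray (equal R′, G′, and B′) comes out with both color differences at essentially zero, which is why a black-and-white set could use Y′ on its own and ignore the rest.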

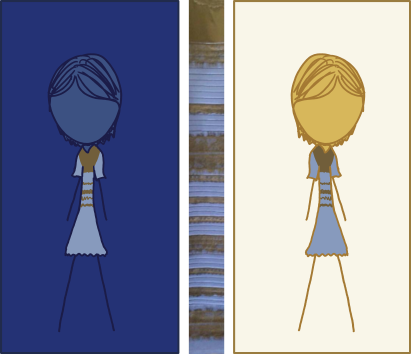

For television broadcasting, keeping the luma component isolated meant that you could still pipe a color composite video signal into a black-and-white TV, and it would still just display a correct black-and-white image: it just used the values from the luma component and discarded the two color difference components. Meanwhile, new monitors designed to interpret all three components correctly would display a proper color image.

This basic model of using two color difference components for video was so wildly efficient and successful that we still use color spaces based on this model today! Even as we passed from analog video signals into digital.

Lookup Table: "YUV"

...but you may have noticed that I just said "basic model" and "color spaces", as in plural. Uh oh.

As if this wasn't all complicated enough, video engineers still couldn't all just agree on one way to implement the Y′, B′-Y′, R′-Y′ model. Engineers working within the NTSC video standard needed something different than those working with PAL, who needed something different than SECAM. The invention of component video signal, where these three components were each carried on their own signal/cable, rather than mixed together in a single, composite signal, also required new adjustments. And digital video introduced even more opportunities for sophisticated color display.

So you got a whole ton of related color spaces, each using the same color difference model, but employing different math to get there. In each case, the "scale factors" of the two color difference signals are adjusted to optimize the color space for the particular recording technology or signal in question. Even though they *always* basically represent blue-difference and red-difference components, the letters change because the math behind them is slightly different.
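To see how "same model, different math" plays out, here's a sketch that computes the two color differences once and then applies the scale factors for a few of the variants listed below. The PAL and SECAM factors are the commonly published ones, and Y′PbPr's are derived from the Rec. 601 luma weights so that each component spans -0.5 to +0.5. Y′IQ, which additionally rotates the U/V axes by 33 degrees, is left out to keep this short. Function and dictionary names are illustrative.

```python
KR, KB = 0.299, 0.114  # Rec. 601 luma weights for red and blue
KG = 1 - KR - KB


def color_differences(r, g, b):
    """Gamma-corrected R'G'B' -> (Y', B'-Y', R'-Y'), common to every variant."""
    y = KR * r + KG * g + KB * b
    return y, b - y, r - y


# (blue-difference scale, red-difference scale) for a few variants.
SCALE_FACTORS = {
    "Y'UV (PAL)": (0.492, 0.877),
    "Y'DbDr (SECAM)": (1.505, -1.902),
    "Y'PbPr (component)": (0.5 / (1 - KB), 0.5 / (1 - KR)),
}


def to_variant(r, g, b, name):
    """Same two differences, scaled the way the named variant scales them."""
    y, b_minus_y, r_minus_y = color_differences(r, g, b)
    scale_b, scale_r = SCALE_FACTORS[name]
    return y, scale_b * b_minus_y, scale_r * r_minus_y


for name in SCALE_FACTORS:
    print(name, [round(v, 3) for v in to_variant(1.0, 0.0, 0.0, name)])
```

Same input, same two differences underneath, three different sets of numbers out.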

So here is a quick guide to some common blue/red difference color spaces, and the specific video signal/context they have been employed with:

- Y′UV = Composite analog PAL video

- Y′IQ = Composite analog NTSC video

- Y′DBDR = Composite analog SECAM video

- Y′PBPR = Component analog video

- Y′CBCR = Component digital video - intended as digital equivalent/conversion of Y′PBPR, and also sometimes called "YCC"

- PhotoYCC (Y′C1C2) = Kodak-developed digital color space intended to replicate the gamut of film for Kodak's Photo CD; mostly for still images, but could be encountered with film scans (whereas most still image color spaces are fully RGB rather than color-difference!)

- xvYCC = component digital video, intended to take advantage of post-CRT screens (still relatively rare compared to Y′CBCR, though), sometimes called "x.v. Color" or "Extended-gamut YCC"

And now a PSA for all those out there who use ffmpeg or anyone who's heard/seen the phrase "uncompressed YUV" or similar when looking at recommendations for digitizing analog video. You might be confused at this point: according to the list above, why are we making a "YUV" file in a context where PAL video might not be involved at all???

As Charles Poynton again helpfully lays out in this really must-read piece - it literally comes down to sloppy naming conventions. For whatever reason, the phrase "YUV" has now loosely been applied, particularly in the realm of digital video and images, to pretty much any color space that uses the general B′-Y′ and R′-Y′ color difference model.

I don't know the reasons for this. Is it because Y′UV came first? Is it because, as Poynton lays out, certain pieces of early digital video software used ".yuv" as a file extension? Is it a peculiar Euro-centric bias in the history of computing? The comments below are open for your best conspiracy theories.

I don't know the answer, but I know that in the vast vast majority of cases where you see "YUV" in a digital context - ffmpeg, Blackmagic, any other kind of video capture software - that video/file almost certainly actually uses the Y′CBCR color space.
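As a concrete example of what those Y′CBCR numbers look like in an 8-bit file, here's a sketch of the Rec. 601 conversion into "video range" code values, where luma runs from 16 to 235 and each chroma channel runs from 16 to 240, centered on 128. It assumes standard-definition (Rec. 601) weights; HD material normally uses Rec. 709 weights instead. The function name is hypothetical.

```python
KR, KB = 0.299, 0.114  # Rec. 601 (standard-definition) luma weights
KG = 1 - KR - KB


def rgb_to_ycbcr_8bit(r, g, b):
    """Gamma-corrected R'G'B' (each 0..1) -> 8-bit video-range Y'CbCr."""
    y = KR * r + KG * g + KB * b   # luma, 0..1
    pb = 0.5 * (b - y) / (1 - KB)  # scaled blue difference, -0.5..+0.5
    pr = 0.5 * (r - y) / (1 - KR)  # scaled red difference, -0.5..+0.5
    # Luma gets codes 16..235; each chroma channel gets 16..240 around 128.
    return (round(16 + 219 * y), round(128 + 224 * pb), round(128 + 224 * pr))


print(rgb_to_ycbcr_8bit(1, 1, 1))  # (235, 128, 128): white
print(rgb_to_ycbcr_8bit(0, 0, 0))  # (16, 128, 128): black
print(rgb_to_ycbcr_8bit(1, 0, 0))  # (81, 90, 240): full red
```

Note that white and black both sit at 128 for chroma: no color, just luma.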

Gamma Correction, Or How I Learned To Stop Worrying and Make a Keyboard Shortcut for "Prime"

Another thing that I must clarify at this point. As evidenced by this "YUV" debacle, some people are really picky about color space terminology and others are not. For the purposes of this piece, I have been really picky, because for backtracking through this history it's easier to understand precise definitions and then enumerate the ways people have gotten sloppy. You have to learn to write longhand before shorthand.

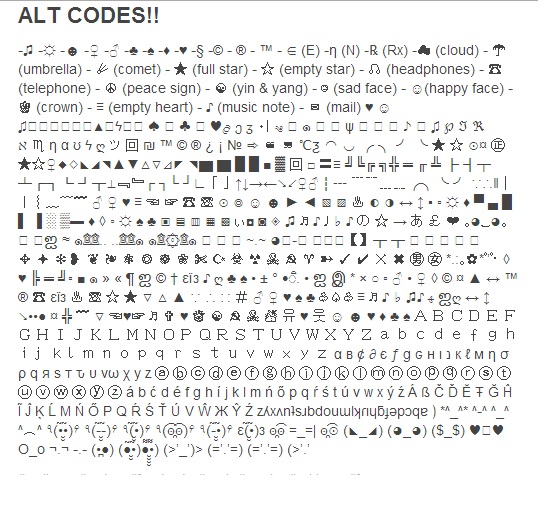

So you may have noticed that I've been picky about using proper, capitalized subscripts in naming color spaces like Y′CBCR. In their rush to write hasty, mean-spirited posts on the Adobe forums, you may see people write things like "YCbCr" or "Y Cb Cr" or "YCC" or any other combination of things. They're all trying to say the same thing, but they basically can't be bothered to find or set the subscript shortcuts on their keyboard.

In the same vein, you may have noticed these tiny tick marks (′) next to the various color space components I've described. That is not a mistake nor an error in your browser's character rendering nor a spot on your screen (I hope). Nor is it any of the following things: an apostrophe, a single quotation mark, or an accent mark.

This little ′ is a prime. In this context it indicates that the luma or chroma value in question has been gamma-corrected. The issue is - you guessed it - math.

The human eye perceives brightness in a nonlinear fashion. Let's say the number "0" is the darkest thing you can possibly see and "10" is the brightest. If you started stepping up from zero in precisely regular increments, e.g. 1, 2, 3, 4, etc., all the way up to ten, your eyes would weirdly not perceive these changes in brightness in a straight linear fashion - that is, you would *think* that some of the increases were more or less drastic than the others, even though mathematically speaking they were all exactly the same. Your eyes are just more sensitive to changes at the dark end of that range than at the bright end.

Gamma correction adjusts for this discrepancy between nonlinear human perception and the kind of linear mathematical scale that technology like analog video cameras or computers tend to work best with. Don't ask me how it does that - I took Statistics, not Calculus.
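For anyone who does want to peek at the "how": the Rec. 709 standard defines camera-side gamma correction as a short linear segment near black followed by a power function with an exponent of 0.45. This sketch applies it to ten equal steps of linear light, so you can see the encoded steps come out big near black and small near white, spending more of the signal on the darks. Displays apply their own separate transfer function, which this doesn't model; `rec709_oetf` is just an illustrative name.

```python
def rec709_oetf(light):
    """Rec. 709 camera transfer: linear light (0..1) -> gamma-corrected signal (0..1)."""
    if light < 0.018:
        return 4.5 * light                 # straight-line segment right near black
    return 1.099 * light ** 0.45 - 0.099   # power-law segment everywhere else


# Ten equal steps of linear light...
steps = [i / 10 for i in range(11)]
# ...become unequal steps in the encoded signal.
encoded = [rec709_oetf(s) for s in steps]
for s, e in zip(steps, encoded):
    print(f"{s:.1f} -> {e:.3f}")
```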

The point is, these color spaces adjust their luma values to account for this, and that's what the symbol indicates. It's pretty important - but then again, prime symbols are usually not a readily-accessible shortcut that people have figured out on their keyboards. So they just skip them and write things like... "YUV". And I'm not just talking hasty forum posts at this point - I double-dare you to try and reconstruct everything I've just told you about color spaces from Wikipedia.
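Here's the math behind Poynton's insistence on "luma": Y′ is a weighted sum of already gamma-corrected R′, G′, and B′, which is not the same number as gamma-correcting the true (linear) luminance. For neutral grays the two agree; for saturated colors they can differ a lot. This sketch assumes the Rec. 709 weights and the Rec. 709 camera transfer function; the helper names are made up.

```python
def rec709_oetf(light):
    """Rec. 709 camera transfer: linear light (0..1) -> gamma-corrected (0..1)."""
    if light < 0.018:
        return 4.5 * light
    return 1.099 * light ** 0.45 - 0.099


KR, KG, KB = 0.2126, 0.7152, 0.0722  # Rec. 709 weights


def luma(r, g, b):
    """Y': weight the gamma-corrected components (what video actually carries)."""
    return KR * rec709_oetf(r) + KG * rec709_oetf(g) + KB * rec709_oetf(b)


def corrected_luminance(r, g, b):
    """For comparison: weight linear light first, then gamma-correct the result."""
    return rec709_oetf(KR * r + KG * g + KB * b)


for name, rgb in [("gray", (0.5, 0.5, 0.5)), ("blue", (0.0, 0.0, 1.0))]:
    print(name, round(luma(*rgb), 3), round(corrected_luminance(*rgb), 3))
```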

Come to think of it, the reason "YUV" won out as a shorthand term for Y′CBCR may very well be just that it doesn't involve any subscripts or primes. Never underestimate the ways programmers will look to solve inefficiencies!

Reading a Vectorscope - Quick Tips

This is all phenomenal background information, but when it gets down to it, a lot of people digitizing video just want to know how to read a vectorscope so they can tell if the color in their video signal is "off".

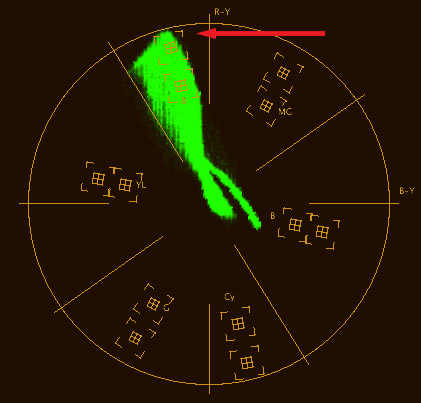

Here we have a standard SMPTE NTSC color bars test pattern as it reads on a properly calibrated vectorscope. Each of the six color bars (our familiar red, green, and blue, as well as cyan, yellow, and magenta) is exactly where it should be, as evidenced by the dots/points falling right into the little square boxes indicated for them (the white/gray bar should actually be the point smack in the center of the vectorscope, since it contains no chroma at all).

The saturation of the signal is shown by how far from the center of the vectorscope the dots for any given frame or pixel of video fall. For example, here is an oversaturated frame - the chroma values for this signal are too high, which you can tell because the dots are extending out way beyond the little labeled boxes.

Meanwhile, an undersaturated image will just appear as a clump towards the center of the vectorscope, never spiking out very far (and a black-and-white image, with no chroma at all, should never leave the center!)
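In numbers, "how far from the center" is just the length of the chroma vector. This sketch assumes Y′UV-style scaling (horizontal axis B′-Y′, vertical axis R′-Y′, as on a typical vectorscope graticule) and Rec. 601 weights. The function names are hypothetical, and a real scope measures the actual signal rather than computing anything from RGB.

```python
import math

KR, KB = 0.299, 0.114            # Rec. 601 luma weights
KG = 1 - KR - KB
U_SCALE, V_SCALE = 0.492, 0.877  # Y'UV scale factors


def uv(r, g, b):
    """Gamma-corrected R'G'B' -> (U, V): horizontal and vertical scope position."""
    y = KR * r + KG * g + KB * b
    return U_SCALE * (b - y), V_SCALE * (r - y)


def chroma_amplitude(r, g, b):
    """Distance of this color's dot from the center of the vectorscope."""
    return math.hypot(*uv(r, g, b))


print(chroma_amplitude(0.5, 0.5, 0.5))   # gray: essentially zero, dead center
print(chroma_amplitude(0.75, 0.0, 0.0))  # a 75% red, as in 75% color bars
print(chroma_amplitude(1.0, 0.0, 0.0))   # full red: a third farther out
```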

Besides saturation, a vectorscope can also help you see if there is an error in phase or hue (both words refer to the same concept). As hopefully demonstrated by our discussion of color spaces and primaries, colors generally relate to each other in set ways - and the circular, wheel-like design of the vectorscope reflects that. If the dots on a vectorscope appear rotated - that is, dots that should be spiking out towards the "R"/red box are instead headed for the "Mg"/magenta box - that indicates a phase error. In such a case, any elephants you have on screen are probably going to start all looking like pink elephants.
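Hue is the other half of the same picture: the angle of that chroma vector around the center. This sketch, under the same assumptions (Rec. 601 weights, Y′UV scaling, made-up helper names), finds where red and magenta land and then simulates a phase error by rotating red's vector until it sits where magenta should be.

```python
import math

KR, KB = 0.299, 0.114
KG = 1 - KR - KB
U_SCALE, V_SCALE = 0.492, 0.877


def uv(r, g, b):
    """Gamma-corrected R'G'B' -> (U, V) scope coordinates."""
    y = KR * r + KG * g + KB * b
    return U_SCALE * (b - y), V_SCALE * (r - y)


def hue_angle(u, v):
    """Angle of the dot in degrees, counterclockwise from the B'-Y' axis."""
    return math.degrees(math.atan2(v, u)) % 360


def rotate(u, v, degrees):
    """Simulate a phase error: spin the chroma vector around the center."""
    t = math.radians(degrees)
    return (u * math.cos(t) - v * math.sin(t), u * math.sin(t) + v * math.cos(t))


red = uv(1, 0, 0)
magenta = uv(1, 0, 1)
print(round(hue_angle(*red), 1))      # about 103 degrees, just left of straight up
print(round(hue_angle(*magenta), 1))  # about 61 degrees

# A phase error of roughly -43 degrees drags red onto magenta's spot.
error = hue_angle(*magenta) - hue_angle(*red)
shifted = rotate(*red, error)
print(round(hue_angle(*shifted), 1))
```

The rotation changes only the angle, not the distance from the center, which is why a pure phase error leaves saturation looking normal.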

The problem is, even with these "objective" measurements, color remains a stubbornly subjective thing. Video errors in saturation and phase may not be so wildly obvious as the examples above, making them a tricky thing to judge, especially against artistic intent or the possibility of an original recording being made with mis-calibrated equipment. Again, you can find plenty of tutorials and opinions on the subject of color correction online from angry men, but I always just tell everyone to use your best judgement. It's as good as anyone else's!

(If you do want or need to adjust the color in a video signal, you'll need a processing amplifier.)

Pixel Perfect: Further Reading on Color

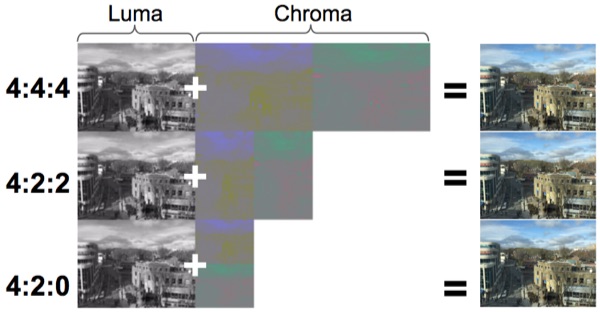

I've touched on digital video and color here but mostly focused on pre-digital color spaces and systems for audiovisual archivists and others who work with analog video signals. Really, that's because all of this grew out of a desire to figure out what was going on with "uncompressed YUV" codecs and "-pix_fmt yuv420p" ffmpeg flags, and I worked backwards from there until I found my answers in analog engineering.
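Since "yuv420p" came up: it refers to 8-bit Y′CBCR stored in separate planes ("p" for planar), with the two chroma planes kept at half the width and half the height of the luma plane. A minimal sketch, assuming even frame dimensions and plain 2×2 averaging (real scalers may filter differently and position chroma samples differently); the helper names are hypothetical.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (a list of rows) into one sample.

    Assumes even width and height; plain averaging is just the simplest filter.
    """
    h, w = len(plane), len(plane[0])
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def yuv420p_frame_bytes(width, height):
    """Bytes in one 8-bit 4:2:0 frame: full-size Y' plane + two quarter-size chroma planes."""
    return width * height + 2 * (width // 2) * (height // 2)


cb = [[100, 102, 140, 142],
      [104, 106, 144, 146]]
print(subsample_420(cb))              # [[103.0, 143.0]]
print(yuv420p_frame_bytes(720, 486))  # 524880, i.e. 1.5 bytes per pixel
```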

There's so much more to get into with color in video, but for today I think I'm going to leave you with some links to explore further.

From the master of pixels, Charles Poynton:

- ColorFAQ

- GammaFAQ

- Digital Video and HD, Second Edition (a dense textbook, but basically the current authoritative resource on digital video)

On the subject of chroma subsampling:

- "Colors, Luminance, and Our Eyes" from Leandro Moreira's "Digital Video Introduction" (a phenomenal introduction/companion to Poynton's textbook)

- the actually relatively understandable Wikipedia entry

- http://www.red.com/learn/red-101/video-chroma-subsampling (has some nice interactive demonstrations of subsampling comparison)

On the subject of other color spaces beyond Y′CBCR:

- openYCoCg: an experimental video codec from AV Preservation by Reto Kromer, based on the Y′CoCg color space, which can convert to/from RGB values but isn't based on human vision models (using orange-difference and green-difference instead of the usual blue/red)

- Digital Photography School: sRGB versus Adobe RGB
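For the curious, the Y′CoCg transform behind the openYCoCg entry above is simple enough to sketch. This version uses exact fractions to show that the round trip through luma, orange-difference (Co), and green-difference (Cg) returns exactly the same RGB values. openYCoCg's own implementation may well differ (integer-only lossless variants such as YCoCg-R exist), and the function names here are made up.

```python
from fractions import Fraction


def rgb_to_ycocg(r, g, b):
    """Integer RGB -> (Y, Co, Cg) as exact fractions."""
    y = Fraction(r, 4) + Fraction(g, 2) + Fraction(b, 4)
    co = Fraction(r, 2) - Fraction(b, 2)                    # orange-difference
    cg = -Fraction(r, 4) + Fraction(g, 2) - Fraction(b, 4)  # green-difference
    return y, co, cg


def ycocg_to_rgb(y, co, cg):
    """Exact inverse, using only additions and subtractions."""
    tmp = y - cg                       # equals (R + B) / 2
    return tmp + co, y + cg, tmp - co  # R, G, B


coded = rgb_to_ycocg(200, 100, 50)
print(coded)                # (Fraction(225, 2), Fraction(75, 1), Fraction(-25, 2))
print(ycocg_to_rgb(*coded)) # back to 200, 100, 50
```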